28 Disadvantages of Closed-Ended Survey Questions Explained

Explore the disadvantages of closed-ended survey questions with 25 sample items revealing biases, limitations, and best practices for better surveys.

Disadvantages of Closed-Ended Survey Questions

Closed-ended survey questions are the darlings of data enthusiasts everywhere. Think Likert scales, multiple-choice checkboxes, and those classic yes/no options—survey designers cherish them for their laser focus and speed. That speed comes at a cost. While these questions help researchers, product teams, HR folks, and marketers make sense of sprawling feedback, there are hidden pitfalls lurking beneath the convenience. In this article, you’ll uncover the subtle—and not-so-subtle—flaws of closed-ended questions, see where and how each question type falls short, and even discover some clever workarounds. If you’ve ever wondered why your survey data feels “off” or too shallow, read on for clear answers, actionable tips, and real-world samples.

Key Limitations Common to All Closed-Ended Formats

Let’s pause and look at the big picture. Closed-ended questions carry some universal disadvantages that can sabotage even the slickest survey.

For starters, response bias is always lurking. Participants may nudge their answers to please the survey creator or society at large (social desirability), choose “agree” just to sound positive, or rush through checkboxes without thought. Closed-ended formats make all of this easy.

Next, loss of rich insights is a major concern. When you box respondents into pre-set answers, you miss all those witty explanations or oddball complaints that only open-text responses can reveal. It’s the survey equivalent of asking if someone likes pizza and never hearing about their pineapple allergy.

Limited nuance is another issue. Complex emotions and motivations just don’t fit neatly in a five-point scale or a single checkbox. You’ll know “how satisfied” someone claims to be, but not why, or what would actually win their loyalty.

There’s also the problem of forced categorization, where people must pick the “least wrong” option instead of one that’s truly accurate. This raises the risk of inaccurate data, as respondents shoehorn themselves into answers that don’t fit.

Cultural and linguistic nuances may distort meaning, too. Cultural misinterpretation creeps in when certain options or phrasings mean different things to different groups. A “5” on a scale may mean perfection somewhere, and just above average elsewhere.

Let’s not forget survey fatigue. Closed-ended question after closed-ended question makes for one tedious experience. Faced with one restricted answer set after another, participants start rushing, which leads to inaccurate or incomplete responses.

The thread that ties these downsides together is a kind of hidden risk in relying only on closed-ended questions: you may collect plenty of data, but much of it is shallow or skewed. In the sections that follow, we’ll see how each common question type brings its own unique flavor of limitation—and how to spot (and break out of) those ruts.

Closed-ended questions can restrict respondents from fully expressing their thoughts, leading to a lack of depth in understanding participant perspectives. (appinio.com)

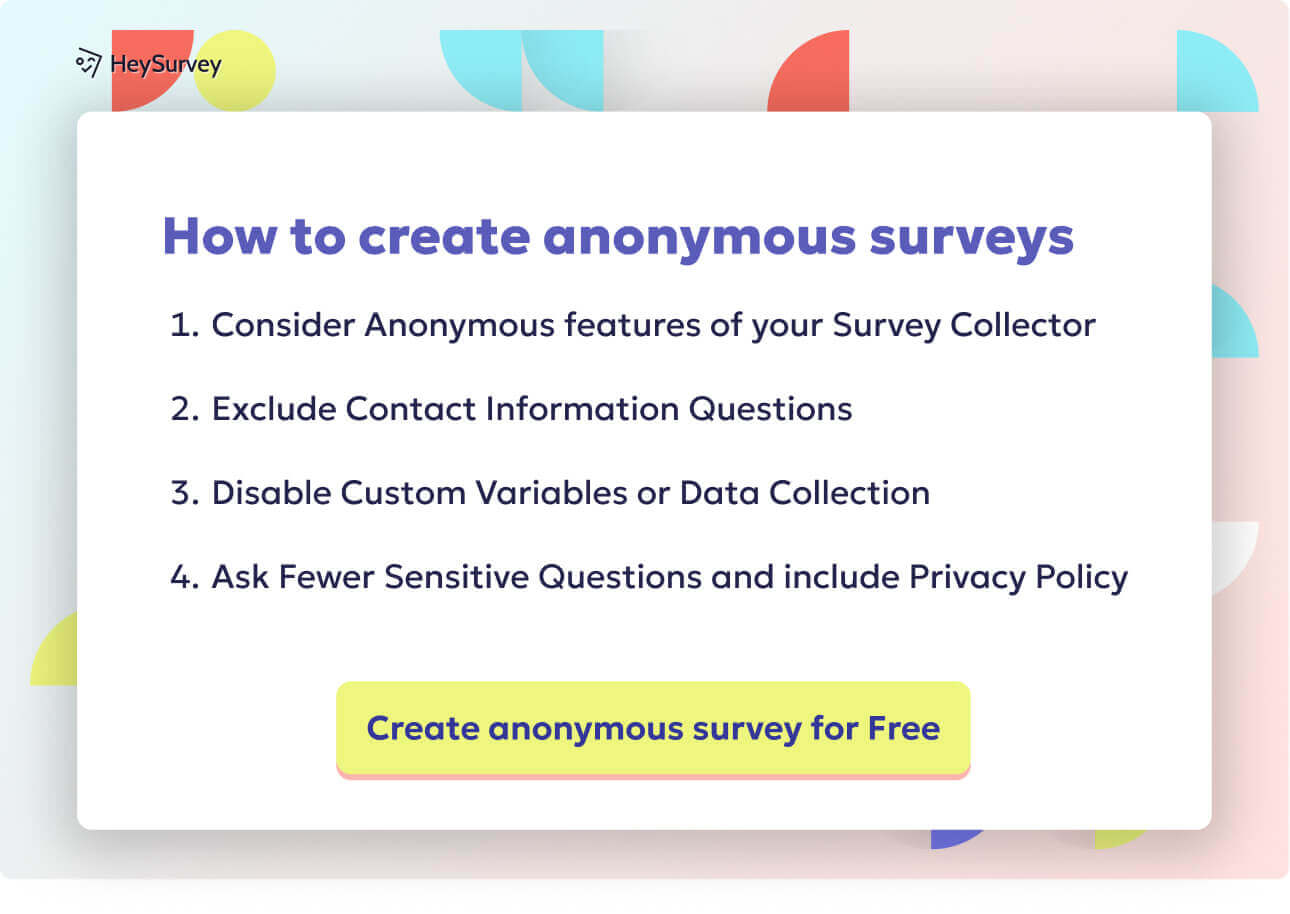

How to Create a Survey with HeySurvey in 3 Easy Steps

Step 1: Create a New Survey

Head over to HeySurvey and kick off your project by clicking “Create Survey”. You can either start with a blank canvas or choose from a variety of handy pre-built templates to save time (perfect if you’re new!). The template option is ideal for closed-ended question surveys since it already organizes question types like Likert scales, multiple-choice, and rating items for you.

Once you pick your start point, you’ll enter the Survey Editor where you can give your survey a clear internal name to keep things tidy.

Step 2: Add Questions

In the editor, hit “Add Question” to choose the type of question you want: Likert scale, yes/no, multiple-choice single or multiple answers, or numerical rating scales. Simply select the question style, type your question text, then plug in your answer options.

- Don’t forget to mark questions as required if you want to ensure responses.

- You can also add descriptions, images, or even upload GIFs to make your survey pop.

- Use the choice settings to decide if respondents can pick one or many answers, or let them add their own “Other” option.

Build your question flow in the order you want respondents to answer.

Step 3: Publish Your Survey

When you’re happy with your questions and the look of your survey, click the “Preview” button to see how it feels on desktop or mobile. Adjust anything that looks out of place.

Once satisfied, hit “Publish”. Note: you’ll need to create a free HeySurvey account to publish and collect responses. After publishing, you’ll get a shareable link to send out or embed in your website.

Bonus Steps to Take Your Survey to the Next Level

Apply Branding: Upload your company logo and customize colors and fonts in the Designer Sidebar to create a consistent look that matches your brand identity. Surveys look more professional and build trust this way.

Define Settings: Set your survey’s start and end dates, limit the number of responses, or add a redirect URL to send people to a thank-you page after submission. These options are found in the Settings Panel.

Skip & Branching Logic: Use HeySurvey’s branching feature to customize the respondent’s path. For example, based on a yes/no answer, you can skip irrelevant questions. It makes the experience smoother and data more relevant.
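To make the idea of branching concrete, here is a minimal sketch of skip logic as plain data. It is not HeySurvey’s actual format or API: the `QUESTIONS` dictionary, its `next` mapping, and the `path_for` helper are all hypothetical names invented for illustration, and the question texts are borrowed from the yes/no samples later in this article.

```python
# Hypothetical survey definition; this is NOT HeySurvey's real data format.
# Each question maps an answer ("yes"/"no") to the id of the next question.
QUESTIONS = {
    "q1": {
        "text": "Have you purchased from us before?",
        "next": {"yes": "q2", "no": "q3"},  # "no" skips the delivery question
    },
    "q2": {
        "text": "Did the product arrive on time?",
        "next": {"yes": "q3", "no": "q3"},
    },
    "q3": {
        "text": "Would you sign up for a loyalty program?",
        "next": {},  # last question: no follow-up
    },
}


def path_for(answers, start="q1"):
    """Return the question ids a respondent sees, given their answers."""
    path = []
    current = start
    while current is not None:
        path.append(current)
        answer = answers.get(current)
        # An unknown or missing answer ends the path.
        current = QUESTIONS[current]["next"].get(answer)
    return path


print(path_for({"q1": "no"}))
print(path_for({"q1": "yes", "q2": "no"}))
```

A first-time visitor who answers “no” to the first question never sees the delivery question, which is the whole point of branching: fewer irrelevant items and less fatigue.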


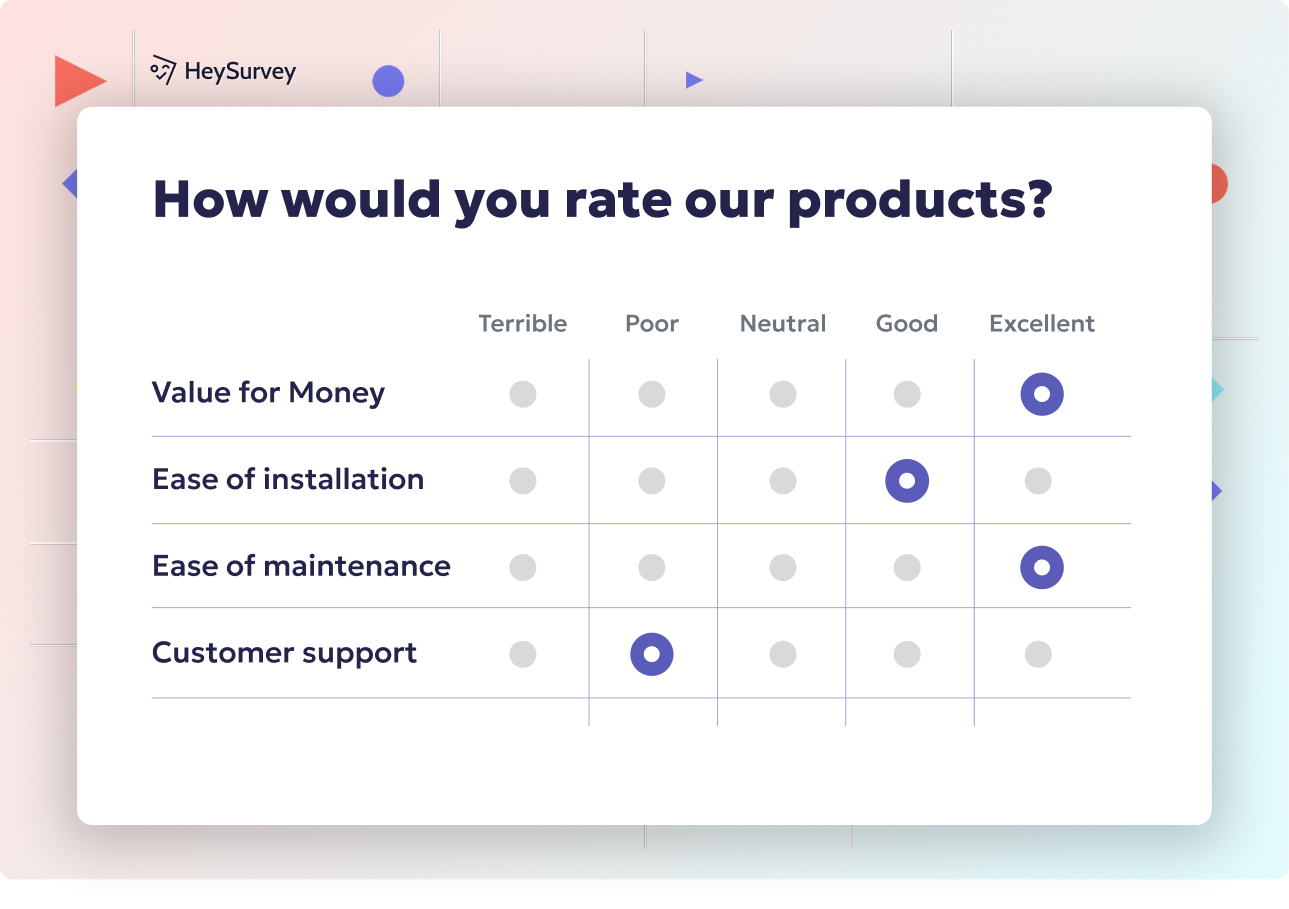

Likert Scale Questions: Drawbacks, Use Cases, & 5 Sample Items

Why & When to Use

Ah, the Likert scale—the king of “rate your agreement”. This trusty format pops up everywhere from employee satisfaction to software usability. It’s brilliant when you need fast, broad snapshots of sentiment, such as benchmarking changes over time, measuring attitudes, or comparing satisfaction across groups.

Some key uses include:

- Capturing customer impressions—“How satisfied are you?”

- Monitoring improvement—“Rate your agreement that support has gotten better”

- Comparing groups—“How much do salespeople love the new CRM?”

Likert scales shine when tracking incremental change or offering a bird’s-eye view of attitudes. They keep analysis easy (just tally those scores!) and ensure respondents can answer quickly, especially in mobile surveys.

Primary Disadvantages

But beware: Likert scales come with their own sneaky weaknesses. A key problem is central-tendency bias, where people keep picking the middle option (not too hot, not too cold), often because they’re tired or uncertain. If you always get a pile of “neutral” answers, this could be why.

Acquiescence bias is next on the list. Sometimes, respondents will agree with statements just because it feels polite or easier than objecting, skewing your results towards consensus that isn’t real.

The limitations run deeper, though. Likert responses yield only ordinal data. “Strongly agree” is more positive than “agree,” but you can’t assume the gaps between scale points are equal. Respondents also interpret the points differently, so arithmetic such as averaging can mislead.
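A small sketch shows why averaging can hide what is going on. The two response sets below are made up for illustration, coded 1 (strongly disagree) to 5 (strongly agree), and `summarize` is a hypothetical helper name. Both sets have the same mean of 3, yet one audience is split down the middle and the other is uniformly lukewarm.

```python
from collections import Counter
from statistics import mean, median

# Made-up responses, coded 1 = strongly disagree ... 5 = strongly agree.
polarized = [1, 1, 1, 3, 5, 5, 5]
lukewarm = [3, 3, 3, 3, 3, 3, 3]


def summarize(responses):
    """Return the mean, the median, and how many people chose each scale point."""
    counts = Counter(responses)
    return {
        "mean": mean(responses),
        "median": median(responses),
        "counts": {point: counts.get(point, 0) for point in range(1, 6)},
    }


for name, data in [("polarized", polarized), ("lukewarm", lukewarm)]:
    print(name, summarize(data))
```

The per-option counts are what separate the two groups here, which is one reason to report the full distribution alongside (or instead of) a single average.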

Midpoint misuse is another trap. That “neutral” or “neither agree nor disagree” can become a dumping ground for those confused by the question or those with no opinion.

So, next time your Likert data looks flat or confusing, these biases could be the culprit. Keeping these flaws in mind helps you interpret (and design) Likert items more wisely.
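One way to check whether a pile of “neutral” answers comes from a few respondents is to measure how often each person picks the midpoint. The sketch below assumes a 5-point scale with 3 as the midpoint; the data, the function names, and the 0.75 threshold are illustrative assumptions, not an established cutoff.

```python
MIDPOINT = 3  # assumed midpoint of a 5-point scale

# Made-up data: respondent id -> answers to five Likert items.
answers = {
    "r1": [3, 3, 3, 3, 3],
    "r2": [4, 2, 5, 3, 4],
    "r3": [3, 3, 4, 3, 3],
}


def midpoint_share(row, midpoint=MIDPOINT):
    """Fraction of a respondent's answers that sit on the midpoint."""
    return sum(1 for value in row if value == midpoint) / len(row)


def flag_midpoint_heavy(answers, threshold=0.75):
    """Return ids of respondents whose midpoint share meets the threshold."""
    return sorted(
        rid for rid, row in answers.items()
        if midpoint_share(row) >= threshold
    )


print(flag_midpoint_heavy(answers))
```

A flag like this is a prompt to look closer, not proof of carelessness: some people really are neutral on everything you asked.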

5 Sample Questions

How strongly do you agree that our checkout process was simple?

Rate your agreement: The onboarding tutorial met my expectations.

Please indicate how often you use feature X.

How satisfied are you with the clarity of our pricing?

How likely are you to recommend our webinar to a colleague?

Closed-ended questions, such as those in Likert scales, can lead to central-tendency bias, where respondents frequently select middle options, potentially skewing results. (en.wikipedia.org)

Dichotomous (Yes/No) Questions: Drawbacks, Use Cases, & 5 Sample Items

Why & When to Use

Nothing is more direct than a yes/no, or dichotomous question. It’s the go-to move for quick, definitive answers. You’ll find them everywhere—screening new hires, deciding if a customer hit a milestone, or creating branching logic that sends people down different survey paths.

The power lies in clarity. Dichotomous questions can:

- Make decisions fast, like “Did you complete the task?”

- Act as gatekeepers—“Are you over 18?”

- Clarify eligibility—“Do you have experience with our platform?”

If the answer truly is one or the other, you can’t beat the simplicity.

Primary Disadvantages

Yet this binary brilliance is also a double-edged sword. The biggest flaw is oversimplification. Real life is rarely yes or no, and most opinions live somewhere between. “Do you like chocolate?” leaves no space for “only dark chocolate” or “yes, but I’m allergic.”

There’s also no insight into intensity. A yes might mean “absolutely!” or “I guess, sometimes…”—but you’ll never know. This contributes to a high risk of misclassification as participants contort their nuanced feelings into black-or-white responses.

Additional hiccups arise when:

- Someone feels forced to answer “yes” just to move forward.

- There’s a social desirability effect—people answer to look good.

- Complex or sensitive questions become misleading soundbites.

So while dichotomous items have their place, over-reliance means losing valuable nuance—sometimes without realizing it.

5 Sample Questions

Have you purchased from us before?

Did the product arrive on time?

Would you sign up for a loyalty program?

Do you own a smart speaker?

Were all your questions answered today?

Multiple-Choice Single-Answer Questions: Drawbacks, Use Cases, & 5 Sample Items

Why & When to Use

Multiple-choice single-answer questions serve up quick, clear options. They’re incredibly useful when you need to sort respondents into neat categories for segmentation, target marketing, or easy statistical analysis.

Use cases where they excel include:

- Demographics—classifying by age, gender, income, location

- Primary motivations—discovering main goals for visiting a site

- Product/service choice—understanding most popular features or paths

Their efficiency makes them a fan favorite for both designers and respondents—especially when categories are clear-cut and mutually exclusive.

Primary Disadvantages

However, life is messier than any menu of options. A big concern is overlooked options—when the real answer is missing from the list. This causes either abandonment or inaccurate responses.

Order effects can skew results if certain answers are consistently listed at the top or bottom. People may choose what they see first, not what fits best.

The forced-choice limitation means many respondents settle for the “closest fit,” so their answers land in categories that don’t really describe them, muddying your results.

Other problems include:

- Categories overlapping, confusing survey takers.

- Lacking an “Other” or “None of the above,” which forces inaccurate answers.

- Failing to update answer sets as audiences evolve.

All these can lead to frustration and unreliable data—think a square peg in a round checkbox.

5 Sample Questions

Which age bracket describes you?

What is your primary reason for visiting our site today?

Which delivery speed did you choose?

Where did you first hear about us?

Which social network do you use most often?

Closed-ended questions can lead to measurement errors due to respondents' misinterpretation or limited response options, potentially compromising data validity. (en.wikipedia.org)

Multiple-Choice Multiple-Answer (Checkbox) Questions: Drawbacks, Use Cases, & 5 Sample Items

Why & When to Use

Checkbox or multiple-answer multiple-choice questions are the survey world’s way to say “Go wild, pick as many as you like!” They’re key when you need to capture the full range of actions, preferences, or experiences—no single answer will do.

You’ll see these shine for:

- Recording all features a customer uses ("Select all that apply")

- Listing every purchase motivator ("Pick the factors that mattered")

- Tallying up devices, subscriptions, or activities, since people are rarely limited to just one

When actual behavior is broad and varied, multiple answers yield more accurate, comprehensive data.

Primary Disadvantages

That freedom? It comes at a sneaky price. A favorite pitfall is over-reporting—respondents click “all that apply,” sometimes liberally or carelessly. This inflates your numbers and makes it look like everyone uses (and loves) every feature.

Analysis complexity goes up, too. Instead of nice, clean counts, you’re juggling overlaps, priorities, and combinations, which can tangle even simple analyses.

Another issue is unclear priority order. Did respondents check their most important preferences first, or in random order? You won’t know. Then there’s tick-all bias, where some people tick every available box just to finish the survey faster.

Other classic problems include:

- Misreading “Select all that apply” as “Select the best option”

- Failing to clarify if certain selections are mutually exclusive

- No easy way to capture most vs. least important answers

So while checkboxes seem thorough, they often leave you with more questions than you started with.
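To see how much tick-all behavior can inflate your numbers, you can compare selection rates with and without the respondents who checked every box. This is a rough sketch under assumed data: the option list, the respondent ids, and both helper functions are hypothetical.

```python
# Made-up checkbox data: respondent id -> set of selected options.
OPTIONS = ["Email", "Chat", "Phone", "Forum", "Knowledge base"]

responses = {
    "r1": {"Email", "Chat"},
    "r2": set(OPTIONS),  # ticked everything
    "r3": {"Phone"},
    "r4": set(OPTIONS),  # ticked everything
}


def tick_all_respondents(responses, options):
    """Return ids of respondents who selected every available option."""
    full = set(options)
    return sorted(rid for rid, picked in responses.items() if picked == full)


def selection_rates(responses, options, exclude=()):
    """Share of (non-excluded) respondents who selected each option."""
    kept = [picked for rid, picked in responses.items() if rid not in exclude]
    if not kept:
        return {opt: 0.0 for opt in options}
    return {opt: sum(opt in picked for picked in kept) / len(kept) for opt in options}


suspects = tick_all_respondents(responses, OPTIONS)
print("ticked all:", suspects)
print("raw rates:", selection_rates(responses, OPTIONS))
print("without tick-all:", selection_rates(responses, OPTIONS, exclude=suspects))
```

Someone who ticks every box may genuinely use everything, so treat the second set of rates as a sensitivity check rather than the “true” answer.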

5 Sample Questions

Which features do you rely on weekly? (Select all that apply)

Which factors influenced your purchase? (Select all that apply)

Which devices do you own?

Which of the following newsletters do you subscribe to?

What payment methods have you used with us?

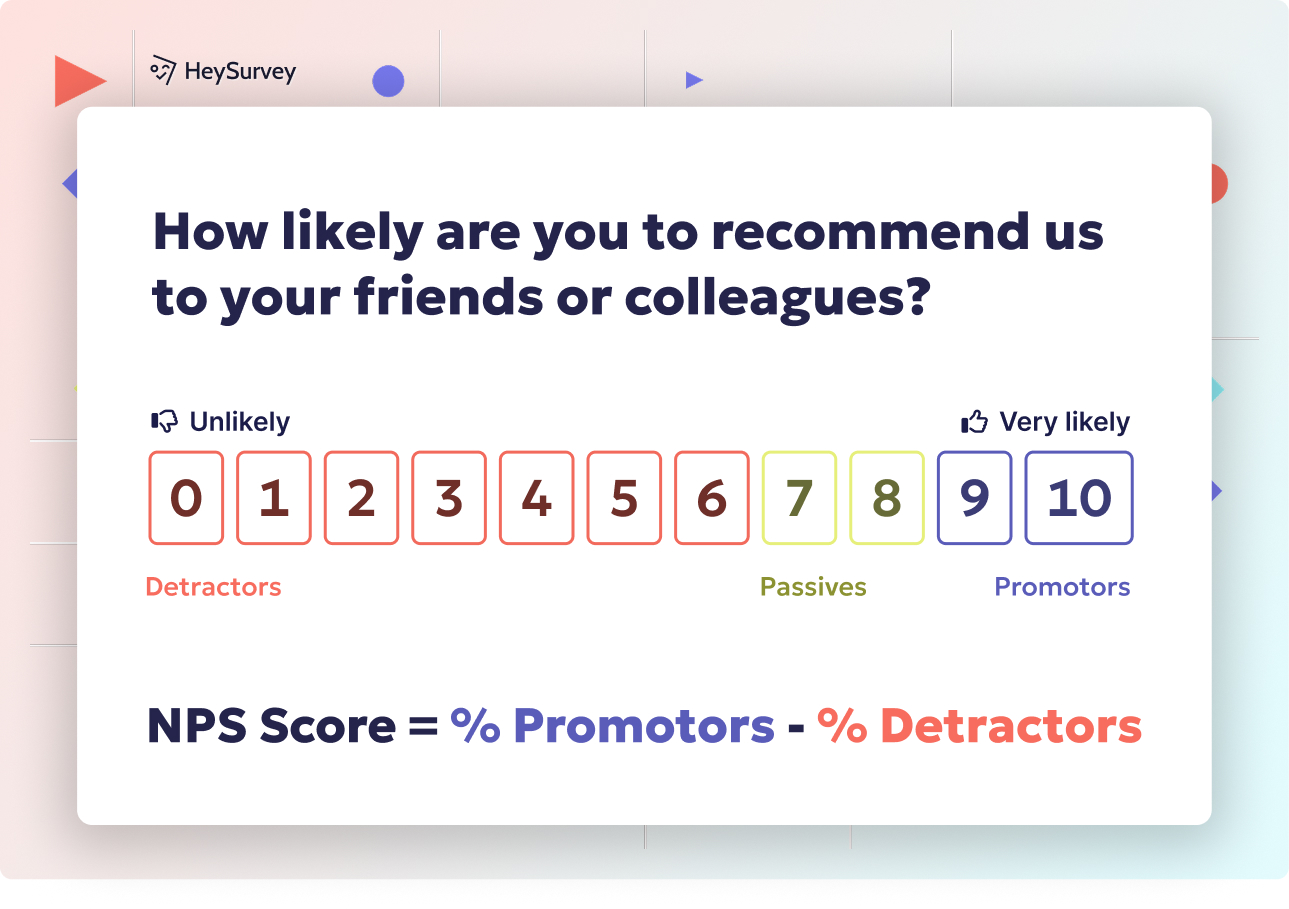

Numerical Rating Scale (0–10) Questions: Drawbacks, Use Cases, & 5 Sample Items

Why & When to Use

When it’s time to measure performance in a quantifiable way, numerical rating scales—like “Rate us from 0 to 10”—come to the rescue. They’re heavily favored for Net Promoter Score (NPS) surveys, customer satisfaction, and product ratings.

Why? Because:

- Numeric scores are easy to chart, trend, and compare.

- Companies love a crisp number to track over time or benchmark success.

- Respondents can express how satisfied, likely, or impressed they are—at least numerically!

It’s great for situations where management wants regular, trackable feedback that fits neatly into a spreadsheet.
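For readers who haven’t computed it by hand, here is a short sketch of how a Net Promoter Score is usually derived from 0–10 answers. The cutoffs used (9–10 as promoters, 0–6 as detractors) are the standard NPS convention rather than anything defined in this article, and the function name and sample scores are made up.

```python
def net_promoter_score(scores):
    """Percentage of promoters (9-10) minus percentage of detractors (0-6)."""
    if not scores:
        raise ValueError("at least one score is required")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


# Made-up answers to "How likely are you to recommend us?" on a 0-10 scale.
scores = [10, 9, 8, 7, 6, 3, 10, 9, 5, 8]
print(net_promoter_score(scores))
```

With four promoters and three detractors out of ten answers, this sample scores 10.0. Notice that the respondents who answered 7 or 8 affect the result only through the total count, so a lot of detail is already discarded before the number reaches a dashboard.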

Primary Disadvantages

But don’t be fooled by the siren song of digits. Cultural differences in number interpretation mean that in some countries, an “8” is outstanding, while in others, it’s just average. This creates huge headaches in global surveys.

Anchoring bias means that people’s ratings shift depending on the examples or context they see first. Show respondents only “bad” reviews, for instance, and they will judge their own experience against that reference point rather than on its own merits.

There’s also the illusion of precision. A “7” versus an “8”—is it really a meaningful difference, or just a gut feeling on a random day? Treating these as meaningful intervals leads to spurious accuracy.

Other worries include:

- Fatigue from overuse (“Rate everything!” syndrome)

- Misinterpretation of what the numbers truly mean

- Pressure to “grade” harshly/leniently based on recent experiences

So even with numbers, the data may look neat but be messier than you think.

5 Sample Questions

On a scale of 0–10, how satisfied are you with our support?

Rate the difficulty of completing today’s task.

How likely are you to renew your subscription?

Please rate the overall quality of our app.

How well did the webinar meet your expectations?

Dos and Don’ts for Mitigating Closed-Ended Question Pitfalls

Even with their flaws, closed-ended questions remain essential tools. But savvy survey creators know how to dodge the biggest traps.

Some best practices for closed-ended surveys you’ll want to remember:

Do combine closed- and open-ended items to validate findings and explore “why” behind the numbers.

Do pilot test your question wording and answer sets. Ask a small group if anything’s confusing or missing.

Do randomize or rotate your answer options (when appropriate) to beat order effects and get truer results.
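If your survey tool doesn’t handle rotation for you, the idea can be sketched in a few lines. The function below is a hypothetical helper, not a feature of any particular platform: it shuffles the answer options for each respondent while pinning catch-all choices such as “Other” to the end, and it assumes you have a respondent id to use as a seed so each person’s order can be reproduced later.

```python
import random


def randomized_options(options, pinned_last=("Other", "None of the above"), seed=None):
    """Shuffle answer options, keeping catch-all choices at the end."""
    rng = random.Random(seed)  # a per-respondent seed makes the order repeatable
    movable = [opt for opt in options if opt not in pinned_last]
    pinned = [opt for opt in options if opt in pinned_last]
    rng.shuffle(movable)
    return movable + pinned


options = ["Search engine", "Friend or colleague", "Social media", "Advert", "Other"]
print(randomized_options(options, seed="respondent-42"))
```

Skip the shuffle when the order itself carries meaning, as with age brackets or rating scales, which is what the “when appropriate” caveat above is about.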

On the other hand, the “don’ts” are just as important:

Don’t overload surveys with too many closed items, or you’ll see quality drop as survey fatigue hits hard.

Don’t assume that numerical scales are true “interval” data. Analyze responses for what they are—usually ordinal, not finely graduated scores.

When you’re mindful of how bias creeps into closed-ended questions, you’ll unlock the strengths of these formats without getting tripped up by their weaknesses. Even small tweaks can lead to stronger, more actionable data.

Final Thoughts & Next Steps

Closed-ended questions are a survey designer’s power tools: fast, scalable, and easy to analyze. But these tools come with inherent limits that can shrink rich experiences down to a handful of clicks. The smartest research blends these efficient formats with open-ended questions and qualitative insights. Ready to step up your survey game? Download our question template or read a related guide for next-level strategies—and keep building those surveys that truly listen.
